Response to the Commission Action Letter

Recommendation 1:

In order to meet the Standards, the team recommends that the College develop and substantially implement an effective, systematic, and comprehensive institutional strategy closely integrating student learning outcomes with all planning and decision-making efforts and resource allocations. (II.A.1.c, II.A.2.a, II.A.2.b, II.B.4, II.C.2.) Specifically, this strategy should include:

- A more effective approach to assessing student learning outcomes at the course, program, and institutional levels on a regular, continuous and sustainable basis. This process must include outcome statements that clearly define learning expectations for students, define effective criteria for evaluating performance levels of students, utilize an effective means of documenting results, and the documentation of a robust dialogue that informs improvement of practices to promote and enhance student learning. (II.A.1.c)

- An approach that recognizes the central role of its faculty for establishing quality and improving instructional courses and programs. (II.A.2.a)

- Reliance on faculty expertise to identify competency levels and measurable student learning outcomes for courses, certificates, and programs, including general and vocational education and degrees. (II.A.2.b)

- Use of documented assessment results to communicate matters of quality assurance to appropriate constituencies. (I.B.4)¹

- Engagement in the assessment of general education student learning outcomes. (II.A.3)²

The College should incorporate changes in the student learning outcomes assessment part of the institutional student learning outcomes cycle that currently includes an integrated planning process, and be expanded so that assessment data is used as a component of program planning processes already in place. As a major part of this strategy, a continuous, broad-based evaluative and improvement cycle must be prominent. All services, including instructional, student services, fiscal, technological, physical, and human resources should be considered and integrated.

Recommendation 2:

In order to assure the quality of its distance education program and to fully meet Standards, the team recommends that the College conduct research and analysis to ensure that learning support services for distance education are of comparable quality to those intended for students who attend the physical campus. (II.A.1.b, II.A.2.d, II.A.6, II.B.1, II.B.3.a)

Notes: ¹ The fourth bullet, identified in the Commission letter as Standard I.B.4, appears from its text to correspond to Standard I.B.5. ² No bullet point corresponds to the fifth citation above, II.C.2, so the discussion focuses on II.A.3, as cited in the fifth bullet point.

Recommendation 1, Bullet point 1 – Standard II.A.1.c

- A more effective approach to assessing student learning outcomes at the course, program, and institutional levels on a regular, continuous and sustainable basis. This process must include outcome statements that clearly define learning expectations for students, define effective criteria for evaluating performance levels of students, utilize an effective means of documenting results, and the documentation of a robust dialogue that informs improvement of practices to promote and enhance student learning.

Standard II.A.1.c:

The institution identifies student learning outcomes for courses, programs, certificates, and degrees; assesses student achievement of those outcomes; and uses assessment results to make improvements.

Specific actions taken to address recommendations on Standard II.A.1.c:

- Conducted a Professional Development activity in which faculty reviewed SLO data; faculty are now using these data to drive instructional improvements through the institutional planning process.

- Instructional Deans increased their communication with faculty on SLO assessment and its link to the integrated planning system.

- Mandated division meetings as part of the 2013-2014 Gavilan College Faculty Association contract.

- Modified the IEC Program Review Forms to include a prompt connecting SLO assessment to the Program Plan.

- Developed an improved website with sorted lists of program plan budget requests and the Budget Committee rankings.

- Released a new data tool (Argos®) that faculty use to discuss instructional improvement.

- Suspended all courses that had not been updated as scheduled, pending approval of their updates (including SLOs) through the curriculum committee.

- Developed and established an SLO evaluation rubric as part of the curriculum review process.

- Established the Learning Council Instructional Improvement Focused Inquiry Group (FIG) to guide SLO policies and procedures.

- Filled instructional improvement faculty support positions, including an SLO liaison.

- Increased SLO assessment at the course and program level.

- Received an ACCJC Degree Qualifications Profile (DQP) grant that supported SLO improvements.

- Articulated courses through the C-ID process and programs through the TMC process, which included SLO review and modification.

Discussion:

In response to the Evaluation Report and its recommendations, the college has made substantial improvements to the comprehensiveness and integration of Student Learning Outcome (SLO) assessment. As noted in the Evaluation Report, some Gavilan College faculty were not fully engaged in SLO assessment or in linking it to the planning and budget cycle. While Gavilan College had built strong integrated planning and SLO systems, the significance and meaning of this work needed further emphasis. This insight led to a philosophical shift toward instructional improvement at the course, program, and college levels. The shift drove considerable advances in the breadth and depth of SLO work and improved its integration with planning and resource allocation. A group that included the academic senate chair, the chair of the curriculum committee, the Executive Vice President of Instruction, the Director of Institutional Research, and other faculty members met to discuss how to increase faculty participation by making SLO work more meaningful and integrated (R1.01). The group developed a plan to encourage and support SLO work and facilitated an event that engaged all faculty in the SLO improvement process.

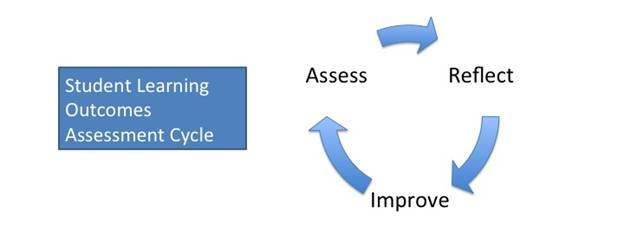

CHART 1: Student Learning Outcomes Assessment Cycle

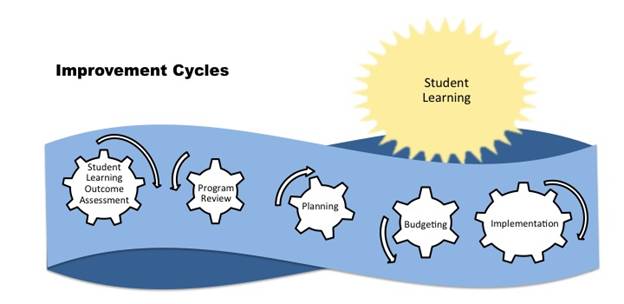

Chart 1 illustrates the Student Learning Outcome (SLO) assessment cycle now in use. The College has SLOs for all courses, programs, and non-instructional departments. These SLOs are assessed, and the results are used to inform changes to courses, programs, and institutional planning. SLO assessments are linked to the program review, planning, budgeting, and curriculum review processes. Chart 2 shows how the SLO assessment cycle in Chart 1 now serves as an input to program planning and the associated resource requests, thereby connecting the results of SLO assessment to resource allocation.

CHART 2: Improvement Cycles

In Fall 2013, faculty participated in a structured exercise to build awareness of and skill in using SLO assessments for instructional improvement (R1.02). As part of the mandatory professional development day training, groups of three to five faculty members reviewed SLO and other data to reflect on what was working in their courses and what improvements could be made at the course, program, and institutional levels to strengthen student learning (R1.03). These discussions produced specific ideas for improvement. For example, in one group composed of faculty from the library, fine arts, and social science departments, an instructor decided to integrate library resources into particular courses to support student writing. Another group learned that a particular course combined high retention with low performance; members shared insights about possible causes and about practices instructors can use to monitor student progress throughout the term, such as checking in with students (especially those at risk of failing), encouraging office hour visits, and adding reviews before finals (R1.04).

Program plans are the component of the annual planning and budget cycle through which resources are requested to support specific Strategic Planning Goals, Institutional Effectiveness Committee recommendations, and SLO assessment results (R1.05). Each program defines the objectives it plans to accomplish each year and the activities expected to achieve them. If an activity has an associated cost, a corresponding budget request is included (R1.06). Each program plan and corresponding budget request is reviewed and ranked by the respective deans and vice president, and by the college's budget committee. Rankings are guided by a rubric that includes a criterion for SLO assessment as a basis for the objective (R1.07). The budget committee uses the ranking scores to determine its funding allocation recommendations. The Professional Development Day (PDD) faculty discussions stressed the link between assessment data and discussion of SLOs in developing improvement plans (R1.08).

A second structured event was held during the Spring 2014 PDD. Its focus was to identify and share strategies for instructional improvement and to prioritize strategies that can be scaled across courses and programs. These strategies will inform Fall 2014 program plan development.

Instructional deans have increased their communication with faculty on SLO assessment and its link to the integrated planning system. At division meetings in Spring and Fall 2013, they discussed the importance of assessment and improvement, and the link between SLO assessment and the development and ranking of annual program plans (R1.13). They also increased their outreach to faculty and programs not based on the main campus. For example, the Dean of Career and Technical Education held discussions with the Drywall and Construction apprenticeship programs, which are located at their own facility in Morgan Hill. The Dean and the Institutional Researcher met with these programs to conduct training and to support their assessment and planning efforts (R1.16). As a result of these meetings, the drywall faculty modified several assessment reports, adding data from course and instructor evaluations. These updates led one course to supplement hands-on projects with a workbook so that students not only built the project but also reviewed and interpreted the information. Some of the assessments were also used to update the equipment and procedures in use (R1.17).

Academic divisions and departments have emphasized regular meetings, which provide increased opportunities for dialogue about student learning improvement and professional development planning (R1.10). Mandatory division meetings are now part of the Gavilan College Faculty Association contract. Additionally, all departments now meet regularly and include instructional improvement as an agenda item at each meeting. Agendas and minutes are forwarded to the Executive Vice President of Instruction (EVPI) (R1.09).

The program review process has been revised to strengthen the integration of SLO assessment with the improvement, planning, and allocation cycles. In Fall 2013, the Institutional Effectiveness Committee (IEC) changed the program review template to reinforce the link between SLO and other assessment data and the development of planning and budget request items (R1.19). Each instructional and non-instructional program is reviewed on a three- to five-year cycle. At this review, programs complete a self-study that reflects the progress made since the last review, issues facing the program, and plans for the future. Program representatives present data, including SLO data, to support their proposals and future plans. The IEC reviews each submission and identifies issues or concerns and any need for additional information. These issues are then conveyed to the program in writing and discussed in person with the program representative and the supervising administrator. The process culminates in recommendations for the program to implement (R1.20). Program review recommendations, as well as SLO data, contribute to the rankings of program planning resource requests in the budget process.

The College has also implemented technology solutions to strengthen the link between assessment and the development of program plan objectives and corresponding budget requests. The Management Information System (MIS) department has developed a website with sorted lists of program plan budget requests and the Budget Committee rankings. Departments can now easily access initial program plan requests, linked to the funding priority list for all campus areas (R1.21).

The College also recently purchased and implemented Argos®, which presents system data in a useful, user-friendly format. A data dashboard allows users to view the enrollment, FTES, success, and retention for courses or disciplines over the past five academic years (R1.31). The data are presented in both table and chart form. Other tools present data on course efficiency, enrollment, costs, and productivity. A dashboard compares distance and non-distance education sections on enrollment, success, and retention (R1.32). These data have prompted distance education instructional improvements. For example, the Distance Education (DE) Coordinator has used this tool to identify instructors with low success or retention rates and has approached them to develop strategies to facilitate student engagement.

Evidence suggests that more programs than before are using SLO and other assessment data to inform the development of program plan objectives. In academic year 2013-2014, 38 departments submitted program plans that identified at least one proposed objective informed by SLO assessment data. Two years earlier, in academic year 2011-2012, only two departments had referenced SLO assessment in their program plans (R1.71).

Several Fall 2013 program plans illustrate the shift toward instructional improvement and the link between assessment data and planning and allocation. For example, the Math department identified inconsistent student performance on learning outcomes across its 200-level courses. The pattern of performance indicated that there might be advantages to creating separate tracks of Algebra 2, one for Science, Technology, Engineering, and Math (STEM) students and another for non-STEM students; therefore, Math 242 was created for non-STEM students. Initial data suggest that students are performing well in Math 242 (R1.22).

For instructional programs, the development of SLOs is integral to the development of the course curriculum and pedagogy. The Curriculum Committee reviews submissions for new programs and courses as well as updates to existing programs and courses (R1.23). Course outlines are developed by faculty and then reviewed by faculty representatives on the curriculum committee. The course outline details SLOs, weekly objectives, and the instructional methodology that will be used to help students achieve the outcomes and objectives. Each proposed course outline is reviewed and discussed among faculty within the discipline and department. Following discussion, feedback, and adjustments, the department chair reviews the curriculum and forwards it to the area instructional dean. If approved by the dean, the course outline is considered by the curriculum committee, and if approved, is forwarded to the EVPI and the governing board for final approval (R1.24).

Each course outline is reviewed and updated at least once every three years. When a course outline is updated, the SLOs for the course are reviewed and assessed. To ensure that all courses are current with SLO assessment, the EVPI notified faculty in December 2013 that any course not updated as required would be suspended in the online class schedule and would need to be updated during Spring 2014 in order to be offered in Fall 2014 (R1.72).

Faculty have led the effort, through the curriculum committee, to provide more guidance on the development and assessment of SLOs. In Fall 2013, this effort strengthened the oversight and informational role of the curriculum committee. The committee adopted a rubric to serve as the basis for its discussion, evaluation, and approval of the SLOs and program learning outcomes (PLOs) included in proposed curriculum (R1.25). Further discussions are underway to review the quality of assessment before a course or program modification is approved (R1.26).

The Academic Senate has also championed efforts to improve the quantity and meaningfulness of SLO assessment work, leading a dialogue about SLO assessment and student learning improvement in Fall 2013. The senate has voiced full support for campus-wide instructional improvement and receives regular updates from the Office of Instruction (R1.27).

The Learning Council (LC) has led initiatives to improve student learning. Within the LC, Focused Inquiry Groups (FIGs) were formed to address significant issues at the college. In Fall 2013, faculty from multiple disciplines formed a FIG on Learning Improvement. Part of the work of this Learning Improvement FIG has been modeling the use of data and information to improve student learning. The FIG has developed a series of data presentations and interactive workshops to train all stakeholder groups in the use of the new data tools available to inform improvement discussions. The first workshop was held in Fall 2013 (R1.28). The group now serves as the college advisory committee for SLO policies and procedures and works with the SLO faculty liaison.

Reassigned time of 20% for two faculty members was approved in Spring 2013. These assignments are intended to improve the quality of student learning. The positions address faculty mentorship, professional development, and student learning outcomes. The SLO faculty liaison position began in mid-November 2013. The faculty liaison has met with the curriculum committee to initiate the work of aligning the curriculum revision process with SLO assessment (R1.30). The faculty liaison has provided training and assistance to faculty members working on SLO assessment.

Initial indications suggest that the shift to instructional improvement and the process modifications are contributing to broader and deeper SLO assessment. The SLO assessment reporting website tracks each course's and program's SLOs, assessment method, assessment results, and how the results are used (R1.33). The site has recorded a dramatic increase in the proportion of courses and programs regularly assessing outcomes since the Spring 2013 visit. To illustrate, from Spring 2013 to Fall 2013 the proportion of courses assessed rose from 68 percent to 87 percent, while the proportion of instructional programs assessed rose from 67 percent to 92 percent (R1.34). Multiple courses and programs have repeated the assessment cycle more than once. All non-instructional programs have been regularly assessing their outcomes and reporting on those assessments.

In late Spring 2013, the college was invited to join a pilot project of the Accrediting Commission for Community and Junior Colleges (ACCJC) to use the Degree Qualifications Profile (DQP) to strengthen its student learning outcomes. The grant provided support to instructional programs to improve student learning outcomes and assessment in order to increase student success (R1.35). The Digital Media program, in particular, used this support to review the program and revise its SLOs to establish a more systematic and purposeful pathway for Digital Media students (R1.36).

More than 56 of the college's courses have been approved through the Course Identification Numbering System (C-ID), which means that their course outlines and SLOs have been compared with and aligned to the C-ID descriptors. In addition, redesigning instructional programs has required identifying new program-level SLOs. Three Transfer Model Curriculum (TMC) degrees have been fully approved, which means that all courses in these programs contain model course descriptors and learning outcomes. Nine more TMC degrees have been submitted for review, and several more are in the planning stages.

Faculty leadership in instructional improvement has encouraged in-depth and meaningful analysis of students' progress on learning outcomes. For example, English faculty met to discuss the assessment for a newly offered accelerated remedial English course. The common assessment found that students were not succeeding at the expected levels on several outcomes. As a result, the instructors agreed on some important pedagogical changes: they created the exam and a grading rubric together, and they will now give students practice with, and strategies for, answering all parts of the prompt (R1.37). The instructors noted the benefit of meeting in person to discuss assessment results and potential improvements. According to the lead instructor, one of the most important developments from the assessment was a commitment to regular meetings, which are now taking place.

SLO-informed course modifications have also improved performance on student learning outcomes. For example, a History instructor noted that only 30 percent of his students achieved at least 70 percent on an outcomes assessment (R1.38). After participating in professional development activities on reading apprenticeship and acceleration, the instructor implemented a "jigsaw" reading activity that led to dramatic increases in student performance on SLO assessments. Students also commented on how much more engaging the activity was than the previous method. The changes and the follow-up SLO assessment gave the instructor two insights: reading assignments need more structure, and student-centered reading assignments are beneficial.

At the program level, Theater program SLO assessment was used to inform changes in that program. For some time, the Theater department had struggled to assess its overall program in a fruitful way. In Fall 2013, the lead instructor for the program developed a special theater production for students in the program as a new way to assess their progress on Theater program learning outcomes. The project allowed students more freedom to explore and develop their theatrical skills than had been possible in other assignments (R1.39). The production gave the instructor a meaningful, in-depth way of assessing students' progress on student learning outcomes. The approach broadened the instructor's view of how assessment can be conducted in a meaningful and productive way. The instructor will repeat the assessment approach on a regular cycle.

Recommendation 1, Bullet point 2 – Standard II.A.2.a

- An approach that recognizes the central role of its faculty for establishing quality and improving instructional courses and programs. (II.A.2.a)

Standard II.A.2.a:

The institution uses established procedures to design, identify learning outcomes for, approve, administer, deliver, and evaluate courses and programs. The institution recognizes the central role of its faculty for establishing quality and improving instructional courses and programs.

Specific actions taken to address recommendations on Standard II.A.2.a:

- Faculty led and facilitated the Professional Development instructional improvement activity, in which faculty practiced reviewing SLO data and using it to drive instructional improvements.

- Provided 20% reassigned time for three instructional improvement faculty liaison positions, including one for SLO/PLO activities.

- Developed and established an SLO evaluation rubric as part of the curriculum review process.

- Established the Learning Council Instructional Improvement FIG to guide SLO policies and procedures.

- Added a peer evaluation component to faculty evaluation process, which increased faculty involvement in instructional improvement.

Discussion:

Course and program curriculum review is a faculty-driven process. New or revised course/program outlines, which include identified student learning outcomes, are developed by faculty in the discipline. The course/program outlines are reviewed at the department level and, if appropriate, are approved by the faculty member who serves as department chair. The outline is forwarded to the relevant instructional dean and then to the curriculum committee for review and approval. A technical review subcommittee reviews curriculum before it is placed on the committee's agenda. The full curriculum committee reviews and approves proposed curriculum. This committee establishes and reviews the standards for all courses and instructional programs at Gavilan College.

All courses and instructional and non-instructional programs have identified SLOs and methods for assessing them. For instructional programs, each new or modified course or program, including its SLOs, is reviewed and approved by the college's curriculum committee (see detailed description above). Each program is updated on a regular cycle, which requires a review at least once every three years. This curriculum process has been improved by the development and implementation of an SLO identification rubric (R1.25). The rubric provides a more detailed guide for evaluating the appropriateness of proposed course and program SLOs. The SLO liaison is leading an effort to implement additional procedures so that the curriculum committee can provide more information about, and review of, the college's SLO assessment efforts.

There is an interrelated system for coordinating the college's SLO assessment and reporting work. During the development phase of the SLO system, an advisory committee was created to establish policies and procedures and guide training efforts. The committee's work culminated in SLO guidelines that were approved by the academic senate, administration, and board of trustees (R1.40). Once SLO work had progressed and a system was in place, the advisory committee stopped meeting. In response to the accreditation report and the need for further improvements in the SLO system, an SLO assessment advisory committee was reestablished in Fall 2013 as a Focused Inquiry Group (FIG) of the Learning Council: the Instructional Improvement Focused Inquiry Group. This group met once in Fall 2013 to establish a future agenda and will address SLO policy and procedure improvements in Spring 2014.

Faculty have led discussions on SLO assessment and instructional improvement. The academic senate and learning council have become more involved in discussions on course, program, and institutional improvement. The college has shifted how it emphasizes and discusses SLO assessment and course and program improvement. Since the Spring 2013 accreditation visit, faculty have taken the lead in strengthening the policies and procedures for SLO development, assessment, reporting, and linkages to planning and allocation. For example, the professional development day activity and the departmental follow-up work were planned and implemented by faculty representatives, including the academic senate chair (R1.12).

Faculty have been assigned to positions in mentoring and SLO assessment, with a mission to enhance instructional quality (R1.41). These positions strengthen the capacity of faculty to lead and carry out instructional improvements overall. The mentorship program will provide guidance to new full- and part-time faculty, while the professional development position will centralize and strengthen training options for faculty. In Fall 2013, the faculty SLO liaison announced his new role focused on advancing the college's SLO work. The liaison initiated work to improve the SLO system and has provided individual support sessions for faculty. In Spring 2014, the liaison has offered training sessions and helped revise the curriculum review process to improve the timing and quality of SLO assessment.

Faculty have also taken on a greater role in the evaluation of part-time faculty. The part-time faculty evaluation process includes classroom observations performed by trained faculty evaluators, with meetings between the evaluator and the evaluee before and after the observation to set goals for the observation and review instructional strategies. Since the process began, faculty feedback has been very positive, and the process has provided yet another avenue for sharing information about instructional improvement. For example, an Aviation faculty member reported that, as a result of the evaluation, more field trips would be planned to give students a stronger experiential component. The instructor also modified labs to promote higher-order learning. Previous labs required students only to accomplish a task; now students must investigate how to accomplish the task and review the mistakes they make along the way.

The college conducts regular reviews of each instructional and non-instructional program through the work of the Institutional Effectiveness Committee (IEC). The committee, which is led by a veteran faculty member, has developed a collaborative, clear, and rigorous process that is integral to the college's planning and allocation system. Each instructional and non-instructional program is reviewed on a three- to five-year cycle. At this review, programs develop a self-study that reflects the progress made since the last review, issues facing the program, and plans for the future. Program representatives present data, including SLO data, to support their proposals and future plans. The IEC reviews each submission and identifies issues or concerns and any need for additional information. These issues are then conveyed to the program in writing and discussed in person with the program representative and the supervising administrator. The process culminates in recommendations for the program to implement (R1.20). Program review recommendations, as well as SLO data, contribute to the rankings of program planning resource requests in the budget process.

In each review year, the IEC has included at least three faculty members. The program review process can result in recommendations to a program for improved completion, quality, and use of SLO assessment. For example, the Business department faced several challenges in coordinating and completing curriculum, SLO assessment, and planning work. The program received two recommendations through program review: to further assess its full complement of courses and programs, and to use assessment data to improve the program. The program's status update showed progress on both recommendations (R1.42).

At the course level, several examples illustrate how enhanced SLO assessment efforts are prompting curricular and pedagogical improvements. For a Disability Resource Center (DRC) Guidance course, previous assessments found that students were not meeting expectations on course student learning outcomes (R1.43). In response, the instructor began incorporating online modules into the curriculum. Fall 2013 reassessments suggest improvement in students' success on the SLOs. An additional benefit of the change is that the instructor can now provide students with valuable information about accommodations and resources available in the DRC and can email the entire class about upcoming assignments.

An instructor in Kinesiology provides another example of how SLO assessment has been used to inform important modifications which contributed to improved student success. As a result of a detailed SLO assessment, the instructor found that students were not performing as well as expected on the course learning outcomes for KIN 20B (Bowling). In response, the instructor worked with the bowling alley to repeatedly set up spare situations so students could practice picking up spares. The instructor also posted videos to the online course shell to provide more detailed illustrations of particular skills. As a result of these improvements, the instructor saw increased student success on these outcomes the next time he assessed student performance (R1.44).

The curriculum and program review processes have developed through strong faculty leadership and participation. The recent changes in curriculum and program review, together with increased faculty leadership, will help ensure that the college continues to rely on its faculty to review and improve its instructional quality.

Recommendation 1, Bullet point 3 – Standard II.A.2.b

- Reliance on faculty expertise to identify competency levels and measurable student learning outcomes for courses, certificates, programs, including general and vocational education, and degrees. (II.A.2.b)

Standard II.A.2.b:

The institution relies on faculty expertise and the assistance of advisory committees when appropriate to identify competency levels and measurable student learning outcomes for courses, certificates, programs including general and vocational education, and degrees. The institution regularly assesses student progress towards achieving those outcomes.

Specific actions taken to address Standard II.A.2.b:

- Curriculum committee developed a rubric to evaluate SLOs at the course and program levels.

- Provided release time for a faculty member to serve as SLO coordinator.

- Faculty increased their involvement in the peer evaluation process.

- SLO work illustrated increased involvement and meaningfulness:

- ART 12B instructor used SLO assessment results to inform instructional improvement.

- Child Development department modified course content, SLOs, and program outcomes and aligned them with the California Teacher Competencies.

Discussion:

Through the curriculum development process, course-level student learning outcomes are identified and aligned with the appropriate program-level and general education outcomes. This alignment is completed as part of each course outline submitted to the curriculum committee. Each course and instructional program must be updated on a regular cycle, and courses that are not updated within the cycle are not offered in the class schedule. These updates require the responsible faculty member to review the student learning outcomes (SLOs) and their alignment with program and General Education (GE) SLOs.

Since the Spring 2013 accreditation visit, the curriculum committee has expanded its advisory role in SLO matters. With input from the academic senate, the committee has developed and implemented a rubric for evaluating the appropriateness of SLOs at the course and program levels. The committee has also begun to discuss the role of the SLO liaison in reviewing assessment quality, and the faculty SLO liaison has proposed that an assessment review become part of the curriculum review workflow. These discussions are expected to promote greater involvement by the curriculum committee in SLO assessment and improvement (R1.45). Alongside these developments in curriculum review, release time has been provided for a faculty member to serve as the SLO coordinator (R1.46).

Increased SLO assessment efforts have also led to improvements in instruction. In Spring 2013, the ART 12B (Sculpture) instructor used multiple methods, including direct observation, artwork critique, and an illustrated research paper, to assess student performance on course-level student learning outcomes. The different methods produced varied results and prompted improvements such as the development of alternative projects and the provision of cover letter examples (R1.48).

The Child Development (CD) program provides another example of faculty work that makes SLO assessment more meaningful. In Fall 2013, as part of the California Community College Child Development Curriculum Alignment Project (CAP), the CD program extensively reviewed and aligned the SLOs of eight Child Development courses. Child Development faculty and the Child Development advisory committee met repeatedly to identify strengths and weaknesses of the current SLOs and course content and to develop plans for improvement. Through this process, course content, SLOs, and program outcomes were modified and aligned with the California Teacher Competencies. The modifications were submitted through the college's curriculum review process in Fall 2013, and eight more Child Development courses will be reviewed and aligned in Spring 2014.

Recommendation 1, Bullet point 4 – Standard I.B.5

- Use of documented assessment results to communicate matters of quality assurance to appropriate constituencies. (I.B.5, listed as I.B.4 in the Evaluation Report)

Standard I.B.5

The institution uses documented assessment results to communicate matters of quality assurance to appropriate constituencies.

Specific actions taken to address Standard I.B.5:

- Added a link to the CCCCO Student Success Scorecard on the college home page.

- Published updated Gainful Employment data in the course catalog and online.

- Established a Learning Improvement Focused Inquiry Group.

- Implemented a new data tool for faculty to use in discussing instructional improvement.

Discussion:

In its Evaluation Report, the Evaluating Team found that Gavilan College met Standard I.B (p. 19, Evaluation Report); however, the standard was quoted as bullet point 4 of Recommendation 1, where it was incorrectly cited as I.B.4.

The college uses regular assessment reports to communicate matters of quality assurance. These include the College Factbook and the Student Profile, Gainful Employment, Student Success, Distance Education, Assessment Distribution, and Student Success Scorecard reports (R1.49). As a result of a series of significant grant-funded initiatives, the Office of Institutional Research (OIR) also regularly produces evaluation reports on particular interventions (R1.50). These data are shared across campus by email in the form of research updates and are posted on the public OIR website. The Director of Institutional Research regularly visits constituency group meetings to present data and discuss the meaning of the results (R1.51), and presents reports to the Board of Trustees and the wider community on a regular basis (R1.52).

The Public Information Officer (PIO) has worked to make more assessment results available to the public. For example, the Chancellor's Office Student Success Scorecard is now presented as a button on the college homepage (R1.53). In addition, the gainful employment data for each identified program are posted online along with an extensive collection of OIR reports, and the gainful employment data are also printed in the course catalog (R1.54).

The Learning Council serves as a forum for discussing data to inform dialogue and interventions (R1.55). In Fall 2013, as in previous terms, the Director of Institutional Research facilitated discussions of assessment data that led to suggestions for improvement (R1.56). Many of the Learning Council's Focused Inquiry Groups (FIGs) use data to develop and evaluate interventions. For example, the FIG that studied establishing a college hour relied heavily on data to demonstrate the need for a weekly time period in which no classes are scheduled (R1.57). In Fall 2013, a Learning Improvement FIG was established to help expand the use of SLO assessment and other data for improvement and planning. The group has met to address this issue and to explore ways to foster improvement in student learning (R1.58).

Data are also an important part of the college's integrated planning system and are systematically incorporated into review and planning. Each instructional and non-instructional program conducts a program review on a regular basis. Program staff and faculty present a variety of data about their program, including success rates, the number of degrees granted, and SLO assessment data (R1.59). Program review participants must support any contention or proposal with data; for example, statements such as "our program is effective" or "we really need a new faculty person" must be supported by data (R1.60). Each program review, with its supporting data, culminates in proposed program plan objectives. The program review submissions are reviewed by the Institutional Effectiveness Committee (IEC), which relies on the supporting data to develop specific recommendations and a resulting report. The report, with the recommendations and a summary of the submissions, is presented through the college's shared governance committees and then to the college's governing board (R1.61).

In Fall 2013, the college held a Professional Development Day activity in which full-time faculty worked through the process of using data to inform a cycle of continuous improvement. In groups, faculty members studied SLO and other data associated with a course and, based on those data, suggested improvements for the course, program, and college. The results of these discussions were then passed on to departments to inform their program plans and to the strategic planning committee to inform college planning (R1.62). Library faculty, for example, noted from multiple assessments a high demand for computer and laptop access and substantial increases in the use of online database content. The department decided to continue supporting the laptop program with a systematic replacement schedule and to look for ways to strengthen the database collection. In its program plan, the library department requested funding both for laptop replacement and for additional databases, and it made database funding a higher priority in its overall budgeting (R1.73).

This event also advanced the college's effort to make data more accessible and useful. The college has added dashboard tools that provide easier access to the data warehouse system. The tools are easy to use and answer typical data questions, such as course or discipline FTES and success and retention rates (R1.63). During the Professional Development Day event, faculty gained experience using the new tool to explore data and to interpret those data in developing improvements at the course, program, and institutional levels.

The college assesses how effectively it communicates information about institutional quality through an annual shared governance and planning survey. The survey asks staff and faculty about their knowledge of institutional performance and about the use of data in program and college decision-making. While continued improvement is needed, recent results indicate that a majority (54.1 percent) of college staff and faculty reported having "much" or "very much" data to inform planning and decision-making (R1.64).

Recommendation 1, Bullet point 5 – Standard II.A.3:

- Engagement in the assessment of general education student learning outcomes. (II.A.3)

Standard II.A.3:

The institution requires of all academic and vocational degree programs a component of general education based on a carefully considered philosophy that is clearly stated in its catalog. The institution, relying on the expertise of its faculty, determines the appropriateness of each course for inclusion in the general education curriculum by examining the stated learning outcomes for the course.

Specific actions taken to address Standard II.A.3:

- Conducted General Education (GE) SLO assessment with input from a broad range of instructional faculty.

- Held a GE SLO summit to process the results and plan future reassessments.

Discussion:

Standard II.A.3 was not cited by the Evaluating Team, but it does appear as bullet point 5 of Recommendation 1.

The college has a history of ongoing assessment, review, and improvement with regard to General Education (GE) outcomes. In Spring 2010, the college assessed GE outcome achievement through a self-report survey administered to a representative sample of students. The survey found a relationship between reported SLO achievement and the number of units completed (R1.65). These findings were presented and discussed across campus and suggested that a more intensive review of the overall GE program was needed (R1.66).

In Spring 2012, a cross-disciplinary task force established by the academic senate coordinated a review of the GE program. The task force completed a self-study of the GE program and of the course composition of the GE pattern. The Institutional Effectiveness Committee recommended that the GE program representatives publicize the review and further examine students' progress on GE outcomes. In Fall 2013, the group submitted a review update addressing its progress on those IEC recommendations. The update laid out a plan for conducting a general education summit to further assess and discuss the GE program student learning outcomes (R1.67).

A more intensive assessment was conducted to engage a broad group of instructional faculty in evaluating GE SLOs. The process, led by the instructional deans in collaboration with the department chairs, targeted instructors teaching GE-identified courses. (Through the curriculum process, faculty proposing new or modified courses are prompted to align each course, where appropriate, with the college's GE student learning outcomes.) In Fall 2013, a sample of instructors teaching courses aligned with particular GE outcomes was asked to assess their students' progress on the respective outcomes (R1.68). A total of 104 instructors (85 percent of the sample) completed the assessment. The results were then summarized and discussed at a special summit of the college's department chairs (R1.69).

At the GE SLO summit, participants were grouped by GE outcome area (A-F) to discuss the results of the course-level instructor assessments. The groups identified the courses, programs, and aspects of the assessment process where the data suggested improvements were needed. For example, some groups observed that in the social/political GE area, students had lower reported proficiency on analytical outcomes. Several participants suggested the need for more cross-disciplinary instruction in these associated skills, since they are key to success after transfer. Another group identified the need to update the outcomes in its area (R1.69).

Much of the discussion at the Fall 2013 GE summit focused on changes that could make the assessment process more useful to instructors. Summit participants proposed, for example, asking instructors to report the proportion of students in their courses at each proficiency level and to note the assessment method they used to arrive at their ratings. Another suggestion was to notify instructors in areas with lower reported proficiency that they will be asked to report assessment results and to discuss the findings as a group at the end of the term. A report summarizing the process and findings was sent to all faculty, and the suggestions are being refined further prior to implementation (R1.70). This in-depth assessment will be repeated on a regular basis and will be part of the preparation for the cyclical program review of the GE program.

Standard II.C.2

The institution evaluates library and other learning support services to assure their adequacy in meeting identified student needs. Evaluation of these services provides evidence that they contribute to the achievement of student learning outcomes. The institution uses the results of these evaluations as the basis for improvement.

Specific actions taken to address Standard II.C.2:

- Library used assessment data to develop its Fall 2013 program plan.

- A new instructional dean supporting the library will provide greater guidance on institutional processes.

Discussion:

Note: Standard II.C was not included in the bullet points of the recommendation, but was cited in the introductory text of Recommendation 1.

In Fall 2013, the college held a Professional Development Day (PDD) activity in which full-time faculty worked through the process of using data to inform a cycle of continuous improvement. Departments used the results of the PDD exercise in creating their annual program plans. For example, Library faculty noted from multiple assessments a high demand for computer and laptop access and substantial increases in the use of online database content. The department decided to continue supporting the laptop program with a systematic replacement schedule and to look for ways to strengthen the database collection. In its program plan, the library department requested funding both for laptop replacement and for additional databases, and it made database funding a higher priority in its overall budgeting (R1.73).

The organizational structure of the college has changed to better address student learning and success, including in the library. Prior to summer 2013, the library, the tutoring program, and distance education, among other programs, were supervised by the Dean of Liberal Arts and Sciences. A new administrative position, Dean of Student Success, is being evaluated to determine whether the College can improve student success by providing supervision and leadership for programs that directly support student learning (R2.06). An interim administrator has helped lead and coordinate efforts to improve the accessibility, planning process, and quality of services provided to students.

Appendix 1 - Evidence for Recommendation 1:

- R1.01 - SLO Departmental Meeting minutes

- R1.02 - Professional Development Day prompts

- R1.03 - Professional Development Day photos

- R1.04 - Professional Development Day dialogue

- R1.05 - Gavilan College Integrated Planning process

- R1.06 - Program Plan sample - Biology

- R1.07 - Program Plan Ranking Rubric

- R1.08 - Email – dialogue in preparation of program plans

- R1.09 - Fine Arts meeting agenda

- R1.10 - Business Department agenda

- R1.12 - Fall 2013 Professional Development Day agenda

- R1.13 - Divisional Meeting minutes

- R1.16 - JPA Meeting agenda

- R1.17 - Drywall SLO reporting site

- R1.18 - Administrative Services Assessment

- R1.19 - IEC Program Plan forms

- R1.20 - IEC process

- R1.21 - Program Plan – ranking list

- R1.22 - Program Plan – Mathematics

- R1.23 - Curriculum Committee website

- R1.24 - Curriculum Committee process

- R1.25 - Curriculum new rubric

- R1.26 - Curriculum Committee minutes

- R1.27 - Academic Senate minutes

- R1.28 - FIG – SLO Assessment agenda

- R1.30 - Curriculum Committee minutes re SLO assessment

- R1.31 - Argos® System Data

- R1.32 - Argos® Distance and Non-Distance Ed Data

- R1.33 - SLO Assessment Site

- R1.34 - SLO Course Report

- R1.35 - Grant Description

- R1.36 - Digital Media Program Modification

- R1.37 - ENG 250P

- R1.38 - HIST 12 SLO report

- R1.39 - Theatre SLO Report

- R1.40 - SLO Guidelines

- R1.41 - GCFA Faculty Contract Liaison positions

- R1.42 - Business Department Status Review Report

- R1.43 - GUID 558A SLO

- R1.44 - KIN 20B SLO

- R1.45 - Curriculum Committee SLO Assessment/Improvement

- R1.46 - Liaison positions

- R1.48 - ART 12B SLO

- R1.49 - Office of Institutional Research

- R1.50 - Gears Evaluation

- R1.51 - Learning Council Minutes

- R1.52 - Board Agenda

- R1.53 - Public Information Office

- R1.54 - Gainful Employment Information

- R1.55 - Learning Council Mission Statement

- R1.56 - Learning Council Leaky Pipeline

- R1.57 - FIG College Hour Minutes

- R1.58 - FIG Development

- R1.59 - Program Review sample

- R1.60 - IEC Orientation PPT

- R1.61 - IEC Summary

- R1.62 - Professional Development Day Summary

- R1.63 - Learning Council (data dashboard tools)

- R1.64 - Shared Governance Survey

- R1.65 - GE SLO Report

- R1.66 - Academic Senate – GE Program Review

- R1.67 - IEC GE Program Review Update

- R1.68 - Assessment email

- R1.69 - Department Chair Minutes

- R1.70 - GE Assessment report

- R1.71 - SLO Assessment Data

- R1.72 - EVPI email

- R1.73 - Library Program Plan

Recommendation 2

In order to assure the quality of its distance education program and to fully meet Standards, the team recommends that the College conduct research and analysis to ensure that learning support services for distance education are of comparable quality to those intended for students who attend the physical campus. (II.A.1.b, II.A.2.d, II.A.6, II.B.1, II.B.3.a)

Standard II.A.1.b

The institution utilizes delivery systems and modes of instruction compatible with the objectives of the curriculum and appropriate to the current and future needs of its students.

Specific actions taken to address Standard II.A.1.b:

- Academic Senate provided a forum for repeated discussion of the strengths and weaknesses of Distance Education (DE) instruction.

- Developed a DE Master Plan and Best Practices document.

- Developed a student authentication policy and effective contact policy.

- Released a new DE data tool (Argos®) used by faculty to inform instructional improvement.

Discussion:

Page 21 of the visiting team's Evaluation Report states: "the College utilizes delivery systems and modes of instruction to meet a variety of student needs and has processes in place to assure the quality of those programs. (II.A.1b)" The report does not cite any deficiencies regarding this standard. Although it is not clear why this standard was cited in the recommendations, we discuss here the College's procedures and policies related to it, from the perspective of distance education delivery (R2.24).

As described in the 2013 Self-Study report:

"When non-traditional delivery systems and modes of instruction are proposed for a course, the course outline, created by department faculty and approved by departments and area deans, is sent to the Curriculum Committee for consideration. Faculty members provide a detailed listing of course objectives and content for both new course proposals and proposed modifications to existing courses. The Curriculum Committee considers all aspects of each proposal including the appropriateness of the delivery system and modes of instruction. A link to the California Community Colleges Distance Education Regulations and Guidelines exists on the Curriculum Committee web page to provide guidance to faculty constructing new or revised course outlines (2A.13). In addition, the Distance Education/Technology committee, comprised of faculty, administrators, and staff, regularly meets to develop and update guidelines and best practices for distance education. The Gavilan College Distance Learning Course Outline Addendum (2A.21) has been recently updated. Resources for distance education and online teaching are made available to faculty on the Teaching and Learning Resource Center website (2A.62).

Course outlines are updated on a schedule, each one every four to five years. The current status of each course is displayed on the curriculum website (2A.6). At the time of a course update, the department faculty evaluates the effectiveness of the delivery methods used in their courses and makes modifications as necessary. Delivery methods for courses are indirectly evaluated during the instructor evaluation process (2A.9). A voluntary survey, "Evaluating Your Online Class" is provided to students taking online classes (2A.22). This survey addresses technical aspects of each class, specific aspects of the class, and the student's comparison of the online format with face-to-face classes. Students in learning communities also complete satisfaction surveys (2A.23).

Deans and department faculty have frequent dialogues about delivery systems and modes of instruction, particularly about the suitability of courses for distance learning. For departments favoring the use of distance education as a delivery method, discussions occur at the Curriculum Committee as part of the approval process. Similar discussions have occurred regarding self-paced computer-assisted instruction in basic mathematics (2A.25). Whereas these dialogues are department-driven, the dialogues related to learning communities have usually involved faculty from two or more departments before coming to the Curriculum Committee (2A.25). The Institutional Effectiveness Committee (IEC) also evaluates programs on a 3-5 year cycle, and reviews the integration of distance education into programs where relevant" (R2.25).

Activities the College has undertaken since the team visit provide further support for Standard II.A.1.b:

For the past three years, the Distance Education (DE) coordinator has worked with the DE Advisory Committee to develop policies and procedures. Together they have completed a DE Master Plan and Best Practices document (R2.02), as well as a student authentication policy and an effective contact policy. These efforts have helped to standardize the quality of DE instruction.

New data tools are now being used to further examine the quality of distance education instruction. A recently developed Argos® data dashboard allows a user to select any course or discipline and compare the enrollment and success rates of DE and non-DE course sections (R2.05). The tool has prompted improvement efforts. For example, the DE coordinator has used it to identify instructors or courses with lower success and retention rates, and she has reached out to some of these instructors to offer guidance on best practices in online classroom management and effective student contact.

Standard II.A.2.d

The institution uses delivery modes and teaching methodologies that reflect the diverse needs and learning styles of its students.

Specific actions taken to address Standard II.A.2.d:

- Distance Education Advisory Committee began developing a handbook and internal standards for delivering distance education.

- Office of Institutional Research has begun providing term-based Distance Education data reports.

Discussion:

The visiting team did not discuss or cite Standard II.A.2.d in its Evaluation Report or note any deficiencies regarding this standard; therefore, it is not clear why it is cited in the recommendation. Below we discuss College policies and procedures relevant to Standard II.A.2.d, as well as recent activities that further support this standard (R2.24).

Instructors of Distance Education classes participate in the same student learning style activities as instructors of traditional classes.

As described in the 2013 Self-Study Report:

"The College utilizes a variety of delivery formats and teaching methods to meet the learning styles of its students. Discussions at both department and department chair meetings have provided an avenue for information sharing on student learning styles and various delivery formats. Staff development day workshops and a desire to share information across campus have also been a benefit. Research on the First Year Experience and Supplemental Instruction has provided a foundation for building success.

Students are becoming more aware of their personal learning style through learning style inventories administered by instructors, the DRC, in guidance classes, workshops and through the newly established Student Success Center. This knowledge provides them the opportunity to select the delivery format that best fits their learning style.

Technology is used to assist both instructors and students. Workshops as well as one-on-one training in the staff resource center are readily available to all instructors and staff who want to utilize various delivery modes and teaching methodologies, and students have the opportunity to select courses offered in a variety of delivery formats.

As courses are developed and updated the information on the curriculum forms requires the originator to indicate how students are assessed (2A.14, 2A.55). In order for a course to be approved it must include multiple means of assessment. The departments generally determine the delivery modes. Some departments offer courses in a variety of delivery modes therefore providing the student with the opportunity to select what works best for them. Classes are offered in a variety of delivery modes, including distance education, technologically enhanced instruction, project based service learning, and learning communities. Supplemental instruction and academic excellence workshops support instruction in math, science, and English.

The Course Outline of Record (COR) indicates which teaching methodologies have been selected for a particular class (2A.69, 2A.70). A review of these indicates that lecture, discussion, demonstration, small groups, guided practice, PowerPoint presentations, video/DVD and computer generated programs are commonly used. When courses are developed, and as they are reviewed for updating, the appropriate teaching methods are selected" (R2.25).

In Spring and Fall 2013, the Distance Education Advisory Committee conducted a series of discussions on the status and direction of online education. These discussions led to a broader understanding of the issues facing distance education and to the development of a handbook and required standards for delivering distance education (R2.03).

To inform discussions on the patterns and effectiveness of distance education (DE), the Office of Institutional Research has begun providing term-based DE data reports. These reports detail enrollment and success patterns in distance education offerings. The reports are provided to the DE Coordinator, are presented to the DE Advisory Committee, and have been shared as a part of the broader campus dialogue described above (R2.04).

Changes to the organizational structure of the College have been proposed as a way to better address student learning and success, including in distance education. Prior to summer 2013, the library, the tutoring program, and distance education, among other programs, were supervised by the Dean of Liberal Arts and Sciences. A new administrative position, Dean of Student Success, is being considered in an effort to improve student success by providing supervision and leadership for programs that directly support student learning (R2.06). An interim administrator now oversees several support services and distance education, and has helped lead and coordinate efforts to improve the accessibility and quality of services provided to distance students.

Standard II.A.6

The institution assures that students and prospective students receive clear and accurate information about educational courses and programs and transfer policies. The institution describes its degrees and certificates in terms of their purpose, content, course requirements, and expected student learning outcomes. In every class section students receive a course syllabus that specifies learning outcomes consistent with those in the institution's officially approved course outline.

Specific actions taken to address Standard II.A.6:

- Developed a written process to ensure that every DE student receives a course syllabus.

- Developed a Distance Education Faculty Handbook

Discussion:

The visiting team did not discuss or cite Standard II.A.6 in its Evaluation Report or note any deficiencies regarding this standard; therefore, it is not clear why it is cited in the recommendation. Below we discuss College policies and procedures relevant to Standard II.A.6, as well as recent activities that further support this standard (R2.24).

Gavilan College assures that all students and prospective students, including distance learning students, receive clear and accurate information about educational courses and programs and transfer policies. The institution describes its degrees and certificates in terms of their purpose, content, course requirements, and expected student learning outcomes. In every class section students receive a course syllabus that specifies learning outcomes consistent with those in the institution's officially approved course outline.

As described in the 2013 Self-Study report:

"Degree and certificate information, including Program Learning Outcomes, is listed in the Gavilan College Catalog for students and prospective students to review. To ensure that this information is accurate, many groups and individuals on campus provide input, including the catalog production team, the enrollment specialist, area deans, and department chairs.

The academic deans review the course syllabi to verify that all information is accurate and that they contain the Student Learning Outcomes for that course. All students enrolled in classes receive a copy of the syllabus for each course. Many instructors also post the syllabus online. Student Learning Outcomes are a part of the Course Outline of Record and are reviewed by the Curriculum Committee on a four to five year cycle.

Gavilan College has implemented a program called Degreeworks that helps students track degree completion on-line, through their own portal. Degreeworks clearly lists courses that have been completed and those still in progress. This allows students, at any time, to be able to assess the specific timeframe needed to achieve their educational goals. With the implementation of Degreeworks, needed coursework and majors are clearly identified to help students meet educational goals. The system takes existing curriculum and integrates it with the student's specific pathway and states what is still needed to complete degree objectives. Degreeworks provides historical insight and reflects the most current information with all curriculum updates.

The compilation of degrees and certificates in the college catalog is reviewed by the catalog production committee, made up of a cross section of all areas on campus: admissions and records, management information systems, counseling, liberal arts, technical and public services, noncredit, community education, disability resources, curriculum, and enrollment management. A format is agreed upon and used consistently throughout the catalog.

Curriculum changes must be approved by the Curriculum Committee, the Board of Trustees, and the Chancellor's Office prior to being included in the catalog. The curriculum website is updated with the most current versions of the course outlines, which include Student Learning Outcomes for each course (2A.69, 2A.70). As new and modified certificates and degrees are approved, those changes are included in the online catalog and Degreeworks. The printed catalog is updated every two years.

All courses are reviewed every four to five years. At the beginning of every semester a list of courses that are due to be updated is posted on the curriculum website. Course updates are faculty driven: faculty write courses, which are taken to the Curriculum Committee for approval. The courses must then be approved by the Gavilan College Board of Trustees. Lastly, the curriculum specialist submits the changes to the Chancellor's Office Curriculum Inventory for approval. Course outlines are kept up-to-date by the curriculum specialist, who maintains course information in the Banner database as well as the curriculum website (2A.13). The College ensures that all sections adhere to the course objectives through the oversight of department chairs and deans." (R2.25)

Information about Distance Education courses is clear and accurate, with descriptions of SLOs specified in the syllabus and the course outline of record (R2.14, R2.15).

Distance Education instructors follow the same procedures as face-to-face instructors in reviewing and verifying syllabi: they follow written requirements for the course syllabus (R2.16, R2.17) and use the verification form to ensure that the syllabus is reviewed by the area dean prior to the start of the semester.

The Distance Education Committee has developed a process to verify that every student has received the course syllabus that includes SLOs. The committee has created a Distance Education Faculty Handbook (www.gavilan.edu/tlc/facultyhandbook2014.pdf), which contains, among other information, a protocol that will go into effect in fall 2014. The protocol (which starts on page 6 under "important policies") will ensure that all students taking an online or hybrid course have received a copy of the course syllabus that includes SLOs. The protocol requires the instructor to open a portion of the online course, making the syllabus and course policies available up to 5 days before the beginning of the semester. The protocol then describes how to make viewing the syllabus a check-in activity for the course, with the instructor pulling a report to confirm that all students have completed this check-in activity (R2.18, R2.19, R2.20, R2.21, R2.22, R2.23).

NOTE: Standards II.B.1 and II.B.3.a are addressed together in the following section.

Standard II.B.1

The institution assures the quality of student support services and demonstrates that these services, regardless of location or means of delivery, support student learning and enhance achievement of the mission of the institution.

Standard II.B.3.a

The institution assures equitable access to all of its students by providing appropriate comprehensive and reliable services to students regardless of service location or delivery method.

Specific actions taken to address Standards II.B.1 and II.B.3.a:

- Conducted service review examining support services and their availability to distance students.

- Conducted evaluation survey and focus groups with DE students regarding effectiveness and suggested improvements for support services.

- Results of the studies were directly provided to support programs.

- Support programs developed responses to the information collected.

- New procedures for online services embed service evaluation in the service itself.

- Office of Institutional Research has begun providing term-based Distance Education data reports.

- Released a new DE data tool (Argos®) used by faculty to inform instructional improvement.

Discussion:

Distance education (DE) enrollment has grown steadily at Gavilan College over the past six years (R2.01). The number of instructors using some form of distance technology in their instruction has correspondingly increased. This growth prompted additional assessment of the support services available to DE students.

The College conducted a study to better understand the availability and effectiveness of distance support services. This study, conducted by the Office of Institutional Research, was an addition to the regularly conducted DE evaluative data collection. The study included a service review and online focus groups, which supplemented the online student survey that is administered each term. For the service review, a list of Gavilan College support services and their respective service components was developed. Representatives for each program then verified the accessibility of the service components for students not able to come to a physical campus. The Institutional Researcher independently verified the information wherever possible. This review identified several service components that did not appear to have distance options. For example, general tutoring was not available online or over the phone for students who were unable or did not wish to come to any Gavilan College site.

The service review findings prompted an immediate discussion of how to implement these service components in a way that would serve DE and non-DE students equitably (R2.07). Each area in which a missing service was identified developed a plan to improve or provide that service. For example, general tutoring, as mentioned above, was not available to DE students. A small task force of faculty and the DE Coordinator developed a plan for offering off-site online synchronous tutoring. The task force decided that CCC Confer®, a web-conferencing technology used by other community colleges, would be an effective tool for offering online tutoring. The College quickly procured the equipment needed for a pilot online tutoring service. The department developed forms and a tutor training process in preparation for the launch of the service (R2.08). Tutor training was conducted for six tutors in Fall 2013, and the first sessions were offered in Spring 2014.

In addition to the service review, the DE student services study also produced findings on the effectiveness of the currently offered distance services and prompted ideas about how to improve services for Gavilan College DE students. Each term, all distance education students are surveyed to assess the quality of their DE educational experience. In Spring 2013, another series of items was added to assess students' experience with support services. An interactive online focus group was conducted with students from a small sample of distance education courses. The combined methods, in general, found that students who participated rated the corresponding support service highly. There were, however, some individual areas identified by students that needed further improvement (R2.01).

The service review and evaluative data were summarized in a study report and presented to the College's Deans' Council and Student Services Council (R2.10). Specific service areas identified in the study as needing improvement were contacted directly to convey the results of the study. In several cases, the findings of the study informed the program-planning process and led to specific changes for improvement. For example, the Financial Aid department added a program plan objective targeting improved services to students who do not come to campus in person. The activities to achieve this objective included checking the financial aid email more frequently and hiring a new financial aid technician whose job focuses on off-site service. The department now lists multiple financial aid phone numbers on the DE website so that students can reach a staff member who is at their desk (R2.11).

Annually, the accessibility and quality of distance support services are systematically evaluated through the Distance Education report and the student survey. As was done in Fall 2013, findings are passed directly to support programs and their supervisors to promote continuous improvement. In addition, each distance education support service now integrates evaluation directly into the service. For example, each student who participates in an online tutoring session is asked to complete a brief survey on the quality of the service (R2.12).

The DE program, like all instructional and non-instructional programs, undergoes periodic program review. To further increase the accountability of support service programs, the Institutional Effectiveness Committee will now include DE accessibility and quality prompts on the review template for all support programs (R2.13). This change ensures that support programs will be required to continually review and improve DE support services.

During Summer and Fall 2013, the administrative and student services departments also strengthened their processes for assessment and improvement. Both groups reviewed their current program-level SLOs and assessment methods. An initial meeting for both areas, facilitated by the Institutional Researcher, was followed by individual meetings with program representatives (R1.18). Directors and staff met with the Institutional Researcher to update the assessment methodology. These groups committed to using their findings and other relevant data to hold a broad and documented discussion about the development of their respective program plans.

To inform discussions on the patterns and effectiveness of distance education (DE), the Office of Institutional Research has begun providing term-based DE data reports. These reports detail enrollment and success patterns in distance education offerings. The reports are provided to the DE Coordinator, are presented to the DE Advisory Committee, and have been shared as a part of a broader campus dialogue (R2.04).

New data tools are now being used to further examine distance education instructional quality. A recently developed Argos® data dashboard allows a user to select any course or discipline and compare the enrollment and success rates of DE and non-DE course sections (R2.05). The DE Coordinator has used this tool to identify instructors or courses with lower success and retention rates and has offered guidance on best practices in online classroom management and effective student contact.

Together, these efforts have provided important information on the accessibility and quality of support services available to Gavilan DE students. Findings from these efforts have prompted improvements to strengthen current services. Lastly, mechanisms are in place so that this evaluative data will be regularly collected and reviewed as a part of the college's integrated improvement cycle.

Appendix 2 - Evidence for Recommendation 2:

- R2.01 - Distance Ed Report

- R2.02 - Distance Ed Master Plan

- R2.03 - Academic Senate Resolution

- R2.04 - Distance Ed Update Fall'13

- R2.05 - Argos® Screenshot

- R2.06 - Dean of Student Learning Job Description

- R2.07 - Distance Ed – Dean's Council Agenda

- R2.08 - Tutoring – Forms/Training

- R2.09 - Distance Ed Support Service Report

- R2.10 - Student Services Meeting

- R2.11 - Financial Aid Program Plan

- R2.12 - Online Tutoring Survey

- R2.13 - Proposed Revised IEC Forms

- R2.14 - ANTH 3 Fall 13 Course Outline

- R2.15 - ANTH 3 Fall 12 Course Outline

- R2.16 - LAS Procedures 2013

- R2.17 - Course Syllabus Verification

- R2.18 - DE Handbook 02-07-14

- R2.19 - Samples of Online Course Syllabus

- R2.20 - DE Agenda 02-06-14

- R2.21 - Syllabus Check-In Activity Report

- R2.22 - CSIS-DM 85 Course Outline

- R2.23 - ENG 1A Course Outline